Designing for a world where Agentic AI acts first

How does AI acting first reshape the way we design?

Agentic AI has been buzzing in both my offline and online worlds lately. The tech industry hit another milestone this year as AI continues to evolve—leaving many people either fascinated or fearful.

This piece comes from pure curiosity. I wanted to understand what Agentic AI actually is and how it is changing the way we design products, workflows, and experiences.

The goal of Design Desk Notes has always been to help you (and me) learn something new—and this topic feels like a big one.

The shift from reactive to agentic

Traditional AI relies on user input. You prompt, it responds. Agentic AI goes a step further: it acts autonomously, learns from context, and decides what to do next without waiting for you.

Think about the difference between ChatGPT, which is reactive and waits for prompts, and an AI assistant that schedules meetings, writes the email, and sends it for you—proactive and agentic.

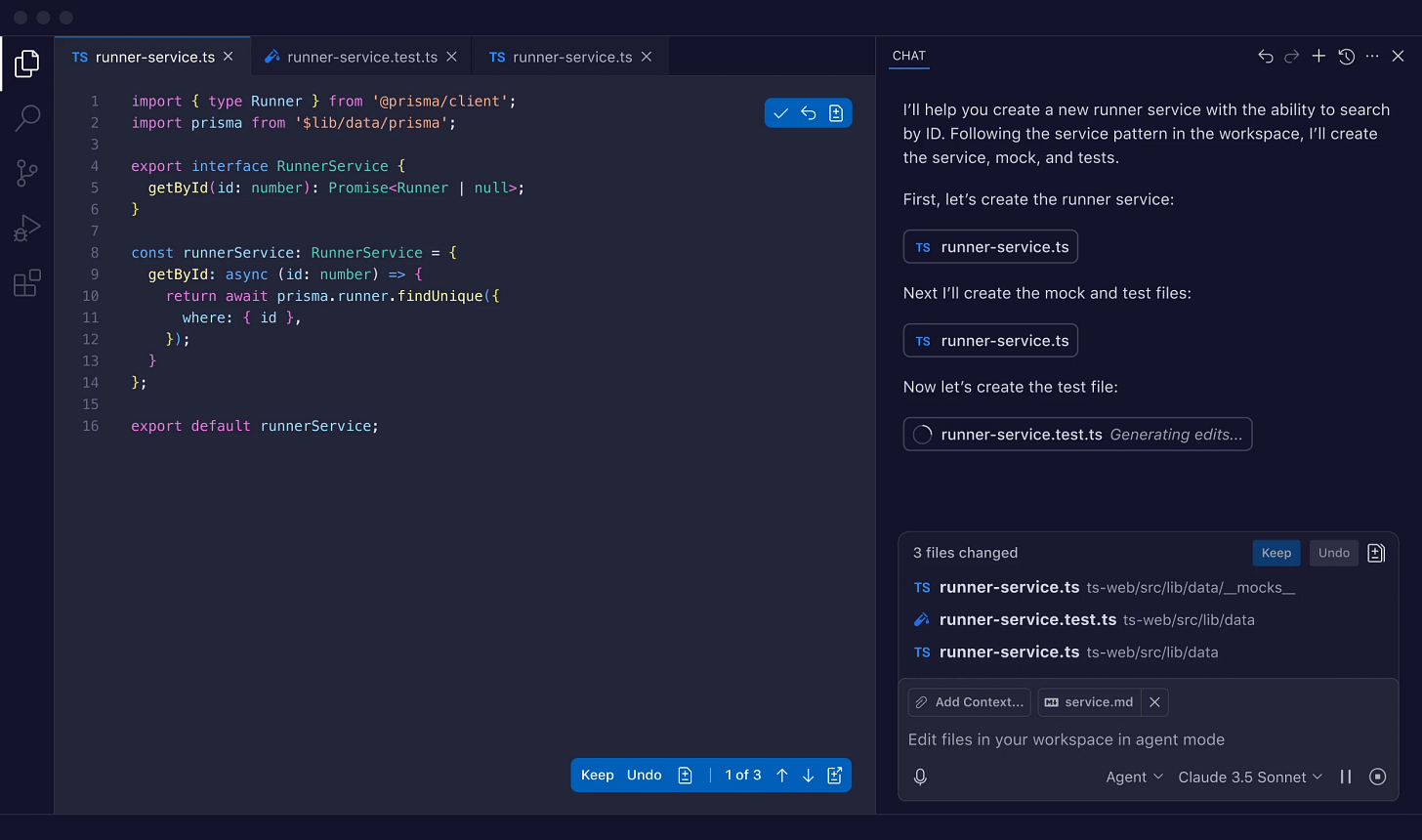

A few AI assistants on the market are already demonstrating what agentic behavior looks like. Superhuman writes and sends replies based on email threads, saving hours each week and making inbox zero actually achievable. Rewind.ai proactively summarizes calls, schedules next steps, and even helps you retrace design or development decisions. Reclaim.ai continuously reorganizes your calendar as priorities shift. And GitHub Copilot goes beyond suggestions, auto-creating tasks, commits, and pull requests without direct prompts.

With AI assistants that analyze our habits and workflows, tedious, repetitive tasks take up far less of our time.

⋆ ˚。⋆୨୧˚

Psychology of trust and control

When AI begins to act on our behalf, it challenges one of the most fundamental principles of UX: User Agency.

User Agency gives users a sense of control, autonomy, and ownership over their actions.

What happens when we’re no longer in the loop? How do we build trust in systems that make decisions before we do?

As designers, we will need to rethink reassurance through intent transparency, reversibility, and clear boundaries around what the system can and can’t do. The new challenge is making autonomous behavior trustworthy.

Design considerations:

Transparency of intent: systems should show why they are acting and what they plan to do.

Reversibility & human oversight: allow the user to intervene, undo, or override, which builds comfort and trust.

Clearly defined boundaries: communicate what the system can do autonomously and what it will still ask for help on.

Calibrated confidence & uncertainty: showing “I’m X% sure about this action” or “I might need help” helps users trust rather than fear.

Build gradual trust & adoption: systems that start with lower autonomy and ramp up as trust grows tend to work better.

Maintaining user agency: users feel more comfortable if they feel “in control” even when the system is acting. That psychological sense matters a lot.
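To make these considerations a little more concrete, here is a minimal sketch of how they might show up in a prototype. Everything in it is my own assumption for illustration: the `ProposedAction` fields, the `ALWAYS_ASK` set, the 0 to 3 trust scale, and the confidence thresholds are hypothetical, not taken from any real product.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ACT = "act"  # proceed autonomously, then report back
    ASK = "ask"  # show the plan and wait for the user's confirmation


@dataclass(frozen=True)
class ProposedAction:
    name: str          # e.g. "reschedule_meeting"
    intent: str        # plain-language reason shown to the user
    confidence: float  # the agent's own estimate, 0.0 to 1.0
    reversible: bool   # can the user undo it afterwards?


# Clearly defined boundaries: hypothetical actions the agent never takes alone.
ALWAYS_ASK = {"send_payment", "delete_account"}


def decide(action: ProposedAction, trust_level: int) -> Decision:
    """Pick how much autonomy to use for one action.

    trust_level is an assumed 0-3 scale that grows as the user
    accepts more of the agent's earlier actions.
    """
    if action.name in ALWAYS_ASK:
        return Decision.ASK
    # Reversibility: anything that cannot be undone keeps a human in the loop.
    if not action.reversible:
        return Decision.ASK
    # Gradual trust: higher trust lowers the confidence needed to act alone.
    required = 0.95 - 0.10 * trust_level
    return Decision.ACT if action.confidence >= required else Decision.ASK


def explain(action: ProposedAction) -> str:
    """Transparency of intent plus calibrated confidence, as interface copy."""
    return f"{action.intent} (I'm {action.confidence:.0%} sure about this.)"


move = ProposedAction(
    name="reschedule_meeting",
    intent="Your 2pm overlaps with a deadline, so I'd move it to tomorrow.",
    confidence=0.80,
    reversible=True,
)
print(decide(move, trust_level=0).value)  # new user: the agent asks first
print(decide(move, trust_level=2).value)  # established trust: the agent acts
print(explain(move))
```

The numbers matter less than the shape: the same action can be handled differently depending on the user's history with the system, and the agent's reasoning stays visible either way.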

⋆ ˚。⋆୨୧˚

Designing new user journeys

In classic UX, the user initiates every step and the system reacts. But in an agentic world, that flow flips. The system anticipates, decides, and acts—turning the designer’s focus from static paths to dynamic, evolving journeys.

Imagine a project dashboard that notices an overdue task, reassigns it, and alerts the right teammate—all before you log in.

This changes the nature of design patterns—notifications become confirmations, permissions become proactive safeguards, and onboarding becomes ongoing adaptation.

We’re now designing with systems that think, not just for users who click.

As AI starts working ahead of us, our interfaces need to evolve from being command-driven (“Tell me what to do”) to collaborative (“Here’s what I’ve done — should I continue?”).
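One way to prototype that collaborative pattern is an activity log that pairs every autonomous action with a way to reverse it. The sketch below is only an illustration under my own assumptions: `ActivityLog`, `LoggedAction`, and the task data are hypothetical names, not part of any real tool.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoggedAction:
    summary: str              # what the agent did, in plain language
    undo: Callable[[], None]  # how to reverse it
    undone: bool = False


@dataclass
class ActivityLog:
    entries: list[LoggedAction] = field(default_factory=list)

    def record(self, summary: str, undo: Callable[[], None]) -> None:
        self.entries.append(LoggedAction(summary, undo))

    def digest(self) -> str:
        """Build the message shown when the user returns."""
        active = [e.summary for e in self.entries if not e.undone]
        if not active:
            return "Nothing to review."
        lines = "\n".join(f"- {s}" for s in active)
        return f"Here's what I've done:\n{lines}\nShould I continue?"

    def undo_last(self) -> bool:
        """Reverse the most recent action that is still in effect."""
        for entry in reversed(self.entries):
            if not entry.undone:
                entry.undo()
                entry.undone = True
                return True
        return False


# The agent reassigns an overdue task before the user logs in.
owners = {"Fix login bug": "Sam"}
log = ActivityLog()
owners["Fix login bug"] = "Priya"
log.record(
    "Reassigned 'Fix login bug' from Sam to Priya",
    undo=lambda: owners.update({"Fix login bug": "Sam"}),
)
print(log.digest())

# The user disagrees and takes the decision back.
log.undo_last()
print(owners["Fix login bug"])
```

Storing the undo step alongside the action means the interface can always offer an override, which keeps the final say with the user.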

Take Notion AI, for example. It plans before you prompt. Notion doesn’t just respond—it observes how you organize, learns your habits, and starts to act preemptively. It’s not fully autonomous yet, but it’s quietly moving in that direction.

(Source: Notion AI)

This new design language is about negotiation. A back-and-forth that blends initiative with oversight. The goal: to keep humans comfortably in charge, even when machines move first.

⋆ ˚。⋆୨୧˚

Opportunities, risks, and what’s next

Agentic AI offers huge potential. It reduces friction, minimizes errors, improves time management, and enables workflows that once needed multiple tools or people.

But new technology brings new risks. Agentic systems can act on wrong assumptions, blur privacy and accountability, and widen the gap in understanding how decisions are made. There’s also the risk of over-reliance, with humans deferring judgment to machines that act confidently but not always correctly.

As designers, we need to prototype behaviors, not just interfaces, and keep asking: What should the AI do, and what should it never do without us?

I’m both excited and cautious. Excited by the creativity and efficiency this unlocks, yet mindful that we’re still responsible for the outcomes.

Agentic AI is changing how we design, decide, and trust. The more autonomous our tools become, the more intentional we must be in guiding them.

From my desk to yours, thanks for reading.

Thanks so much for being here. If you enjoyed this, you might also like “there you are,” where I share reflections on growth, style, and life beyond design.

Come hang out with me on Instagram.

—Janice Fong x